Top Software Testing Best Practices to Ensure Quality

Building Better Software: A Guide to Essential Testing Practices

Software bugs are expensive. They erode user trust and impact your bottom line. This article presents nine software testing best practices to improve your software quality and development efficiency. Whether you're an experienced programmer or new to development, these actionable insights will help you build more robust and reliable applications.

This guide gives practical direction on putting each practice to work in your development workflow, whatever your team's size or technical expertise. Each section explains the core concept, points to real-world examples, and closes with actionable steps.

This article covers a range of critical software testing best practices, including:

- Test-Driven Development (TDD)

- Continuous Integration/Continuous Deployment (CI/CD) Testing

- Risk-Based Testing

- Test Automation Pyramid

- Shift-Left Testing

- Exploratory Testing

- Behavior-Driven Development (BDD)

- API Testing Best Practices

- Test Data Management

By understanding and implementing these software testing best practices, you can minimize defects, reduce development costs, and deliver higher quality software that meets user expectations. This, in turn, will contribute to a better user experience, increased customer satisfaction, and a stronger competitive edge in the market. Let's dive in.

1. Test-Driven Development (TDD)

Test-Driven Development (TDD) flips the traditional software development script. Instead of writing code and then testing it, TDD dictates writing the test before the code. This "test-first" approach forces developers to consider the desired behavior upfront, leading to more modular, testable, and robust code. The core process revolves around a "Red-Green-Refactor" cycle.

How TDD Works: The Red-Green-Refactor Cycle

- Red: Write a small, focused test that defines a specific piece of functionality. This test should initially fail, as the code to implement the functionality doesn't exist yet.

- Green: Write the minimum amount of code necessary to make the test pass. The focus here isn't on elegant code, but on satisfying the test conditions.

- Refactor: Clean up both the test and production code without changing behavior, rerunning the tests after each change to confirm they still pass. This stage addresses code quality, readability, and duplication.
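One pass through the cycle might look like the following minimal sketch, which uses Python's built-in unittest module. The `apply_discount` function and its rules are hypothetical, chosen only to illustrate the order of work: the tests were written first (Red), and the function is the simplest code that makes them pass (Green).

```python
import unittest


# Green: the simplest implementation that satisfies the tests below.
# During the Red step this function did not exist yet, so the tests failed.
def apply_discount(price: float, percent: float) -> float:
    """Return price reduced by percent (hypothetical example function)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Red: these tests were written first and describe the desired behavior.
    def test_ten_percent_off_reduces_price(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_percent_above_100_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```

Saved to a file, the tests can be run with `python -m unittest`. The Refactor step would then tidy the implementation while this suite stays green.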

Real-World Examples of TDD

Companies like Spotify and Netflix leverage TDD for critical systems. Spotify uses TDD for their payment processing, ensuring secure and reliable transactions. Netflix applies it to their recommendation algorithms, enabling continuous improvement and experimentation.

Actionable Tips for Implementing TDD

- Start Small: Begin with simple, focused tests covering individual units of code.

- Isolation is Key: Keep tests independent and avoid dependencies between them. This simplifies debugging and maintenance.

- Descriptive Test Names: Use clear test names that accurately describe the behavior being tested. This improves code readability and understanding.

- Regular Refactoring: Continuously refactor both your test and production code to maintain quality and avoid technical debt.

- Practice with Katas: Coding katas, like Uncle Bob's Bowling Game, offer a safe environment to practice TDD and build muscle memory.

Why and When to Use TDD

TDD is a valuable software testing best practice because it provides a safety net against regressions, improves code design, and reduces debugging time. It's particularly beneficial when working on complex projects, integrating new features, or refactoring existing code. While it might require an initial time investment, TDD ultimately leads to higher quality software and faster development cycles in the long run. Popularized by figures like Kent Beck, Robert C. Martin, and Martin Fowler, TDD is a cornerstone of modern software engineering practices.

2. Continuous Integration/Continuous Deployment (CI/CD) Testing

Continuous Integration/Continuous Deployment (CI/CD) testing represents a fundamental shift in how software is tested and delivered. It tightly integrates automated testing into the software delivery pipeline. Every code change triggers an automated build process, followed by a series of tests. This ensures defects are identified and addressed early, streamlining the development process and facilitating faster releases.

How CI/CD Testing Works

The CI/CD pipeline begins with developers committing code changes to a shared repository. The CI system automatically detects these changes and initiates a build process. This process compiles the code and runs a suite of automated tests, including unit, integration, and end-to-end tests. If all tests pass, the code is automatically deployed to a staging environment for further testing. Finally, if everything checks out in staging, the CI/CD system deploys the code to production.
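The gating behavior described above can be sketched in a few lines of Python. This is an illustration of the control flow only: real CI systems are configured in their own formats, and the stage names and pass/fail results below are hypothetical stand-ins for real build and test commands.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, step in stages:
        if not step():
            print(f"stage '{name}' failed; later stages are skipped")
            break
        completed.append(name)
    return completed


# Hypothetical stages; each callable stands in for a real build or test command.
stages = [
    ("build", lambda: True),
    ("unit tests", lambda: True),
    ("integration tests", lambda: False),  # simulate a failing stage
    ("deploy to staging", lambda: True),
    ("deploy to production", lambda: True),
]

completed = run_pipeline(stages)
print(completed)  # ['build', 'unit tests']
```

Because the integration stage fails, neither deployment runs, which is the property that keeps broken changes away from users.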

Real-World Examples of CI/CD

Industry giants like Google, Facebook, and Amazon exemplify the power of CI/CD. Google runs millions of tests daily in their CI system, catching bugs before they reach users. Facebook deploys code thousands of times per day, maintaining rapid iteration and feature delivery. Amazon processes thousands of deployments daily through its sophisticated CI/CD pipeline.

Actionable Tips for Implementing CI/CD

- Keep Build Times Short: Aim for build and test times under 10 minutes to maintain a fast feedback loop.

- Cache Test Results: Implement test result caching to avoid redundant test runs and accelerate the pipeline.

- Use Feature Flags: Decouple deployment from release using feature flags. This enables controlled rollouts and reduces deployment risks.

- Monitor Test Flakiness: Track and fix unstable tests promptly. Flakiness erodes trust in the CI/CD process.

- Implement Rollback Mechanisms: Ensure you have a robust rollback mechanism in place. This allows quick recovery in case of production issues.
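As an illustration of the feature flag tip, here is a minimal sketch of a percentage rollout. It assumes users have stable string IDs; the `is_enabled` function and the flag name are hypothetical and are not taken from any particular feature flag product.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Deterministically decide whether a flag is on for a user.

    Hashing flag + user_id puts each user in a stable bucket from 0 to 99,
    so the same user always gets the same answer for a given percentage.
    """
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < rollout_percent


# Code ships to production with the flag at 0%, then the percentage is raised.
print(is_enabled("new-checkout", "user-123", 0))    # False
print(is_enabled("new-checkout", "user-123", 100))  # True
```

Because buckets are stable, raising the percentage only adds users; nobody who already has the feature loses it mid-rollout.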

Why and When to Use CI/CD

CI/CD is essential for any team aiming to deliver software rapidly and reliably. It provides immediate feedback on code changes, reduces integration issues, and accelerates time-to-market. It is particularly beneficial for projects with frequent releases, large codebases, and distributed teams. CI/CD, championed by figures like Martin Fowler and the authors of the Continuous Delivery book (Jez Humble and Dave Farley), is a cornerstone of modern software development best practices. It streamlines the testing process, reduces risk, and allows teams to focus on delivering value to users.

3. Risk-Based Testing

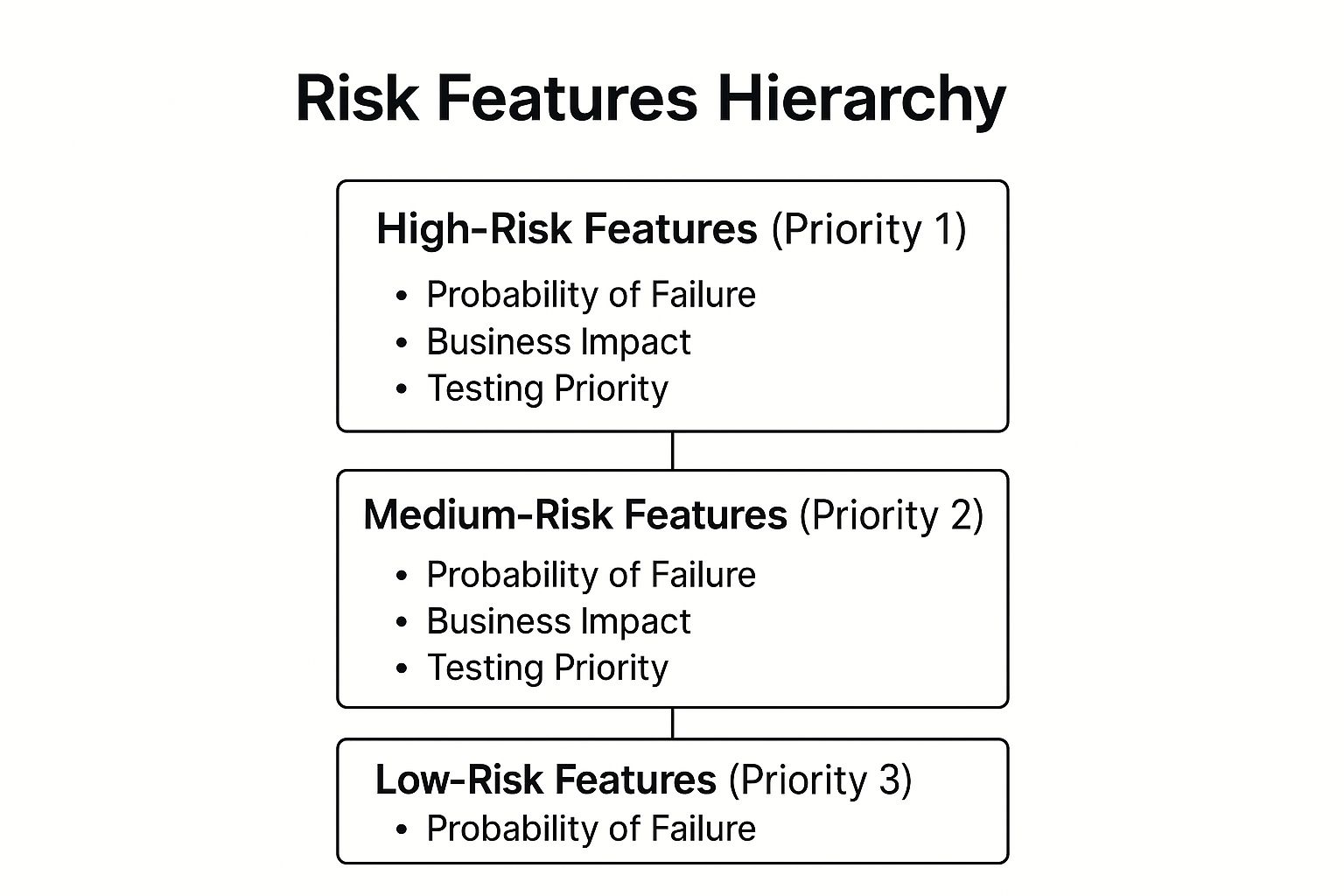

Risk-Based Testing (RBT) is a strategic approach to software testing that prioritizes testing efforts based on the risk assessment of different features, components, or areas of the application. Instead of treating all test cases equally, RBT focuses resources on areas with the highest probability of failure and the greatest potential impact on business operations. This ensures that critical functionalities are thoroughly tested, while less critical areas receive appropriate, but potentially less extensive, testing coverage.

The infographic above visualizes a sample risk assessment hierarchy, displaying how features are categorized based on their probability of failure and potential business impact. As shown, high-risk features like core payment processing are given top priority for testing, followed by medium and low-risk areas.

How Risk-Based Testing Works

RBT involves identifying potential risks, analyzing their likelihood and impact, and prioritizing testing efforts accordingly. It depends on close collaboration between testers, developers, and business stakeholders to ensure a shared understanding of the application's critical functionalities and potential vulnerabilities.

Real-World Examples of Risk-Based Testing

Imagine a banking application. Payment processing is a high-risk area. A failure here could have severe financial consequences. Therefore, it receives the highest testing priority. UI themes, while important for user experience, carry a lower risk and are tested accordingly. Similarly, e-commerce platforms prioritize checkout and payment flows over less critical features like product recommendations. Medical device software emphasizes testing safety-critical functions above cosmetic elements.

Actionable Tips for Implementing Risk-Based Testing

- Involve Business Stakeholders: Engage business stakeholders early in the process to identify key business risks and priorities.

- Use Historical Data: Leverage past defect data to inform risk assessments. Areas with a history of bugs are likely to pose higher risks.

- Simple Scoring System: Develop a straightforward risk scoring system, often based on probability multiplied by impact.

- Regular Reviews: Review and update risk assessments periodically, especially after major releases or changes in business requirements.

- Document Decisions: Document risk assessments and decisions for future reference and traceability.
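The probability-times-impact scoring mentioned above can be sketched as follows. The 1-to-5 scales and the ratings assigned to each feature are assumptions made for this example; a real team would agree on its own scale with stakeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Feature:
    name: str
    probability: int  # likelihood of failure, 1 (low) to 5 (high)
    impact: int       # business impact of a failure, 1 (low) to 5 (high)

    @property
    def risk_score(self) -> int:
        return self.probability * self.impact


def prioritize(features: List[Feature]) -> List[Feature]:
    """Order features from highest to lowest risk score."""
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


# Hypothetical ratings for a banking application.
features = [
    Feature("UI themes", probability=2, impact=1),
    Feature("Payment processing", probability=3, impact=5),
    Feature("Account statements", probability=2, impact=3),
]

for feature in prioritize(features):
    print(f"{feature.risk_score:>2}  {feature.name}")
```

With these ratings, payment processing scores 15, account statements 6, and UI themes 2, so testing effort would be allocated in that order.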

Why and When to Use Risk-Based Testing

Risk-Based Testing is a software testing best practice because it optimizes resource allocation, ensures critical functionalities are thoroughly tested, and reduces the overall cost and time required for testing. This approach is especially valuable when facing time constraints, limited resources, or when dealing with complex systems where exhaustive testing is impractical. Pioneered by experts like Rex Black and incorporated into the ISTQB Advanced Level syllabus, Risk-Based Testing provides a practical framework for effective software quality assurance. It's a cornerstone of efficient and effective software testing, particularly for startups and small businesses.

4. Test Automation Pyramid

The Test Automation Pyramid is a strategic framework that visualizes the ideal distribution of different types of automated tests. It guides teams to build a robust and efficient testing suite by prioritizing faster, more isolated tests at the base and minimizing slower, more complex tests at the top. This approach optimizes for speed, reliability, and maintainability, reducing overall testing costs and improving software quality.

How the Test Automation Pyramid Works

The pyramid consists of three layers: Unit, Integration, and UI/End-to-End. The base comprises numerous unit tests, verifying individual components in isolation. The middle layer contains fewer integration tests, checking interactions between different modules or services. The top layer has a small number of UI/End-to-End tests that validate the entire system from the user's perspective.

Real-World Examples of the Test Automation Pyramid

Google, Spotify, and Microsoft all utilize the Test Automation Pyramid principles. Google aims for a 70/20/10 split between unit, integration, and UI tests. Spotify employs this approach for their microservices testing strategy. Microsoft's .NET testing guidelines also recommend the pyramid approach.

Actionable Tips for Implementing the Test Automation Pyramid

- Aim for 70/20/10 Distribution: Target a distribution of approximately 70% unit tests, 20% integration tests, and 10% UI tests.

- Fast and Isolated Unit Tests: Write unit tests that execute quickly and are independent of external dependencies.

- Critical Integration Tests: Focus integration tests on crucial interactions between system components and external services.

- Focused UI Tests: Keep UI tests concise, concentrating on happy path scenarios and critical user flows.

- Regular Rebalancing: Periodically review and rebalance your test distribution as your system evolves.
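To support the periodic rebalancing review, a team could compare its actual test counts against the 70/20/10 target. The sketch below assumes test counts per layer are already available; the 10-point tolerance and the sample numbers are hypothetical.

```python
from typing import Dict

TARGET = {"unit": 70, "integration": 20, "ui": 10}  # percentages from the text


def distribution(counts: Dict[str, int]) -> Dict[str, float]:
    """Convert raw test counts per layer into percentages."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no tests counted")
    return {layer: 100 * n / total for layer, n in counts.items()}


def drift(counts: Dict[str, int], tolerance: float = 10.0) -> Dict[str, float]:
    """Return layers whose share differs from TARGET by more than tolerance points."""
    actual = distribution(counts)
    return {
        layer: round(actual.get(layer, 0.0) - target, 1)
        for layer, target in TARGET.items()
        if abs(actual.get(layer, 0.0) - target) > tolerance
    }


# Hypothetical suite that has grown top-heavy.
counts = {"unit": 400, "integration": 200, "ui": 400}
print(drift(counts))  # {'unit': -30.0, 'ui': 30.0}
```

A negative value means the layer is under-represented relative to the target, and a positive value means it is over-represented.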

Why and When to Use the Test Automation Pyramid

The Test Automation Pyramid is a valuable software testing best practice because it promotes a balanced and efficient testing strategy. This approach helps teams reduce testing time, improve test reliability, and minimize maintenance costs. It is particularly beneficial for complex projects with multiple components, frequent integrations, or a high volume of user interactions. Introduced by Mike Cohn in his book Succeeding with Agile and further popularized by Martin Fowler and the Google Test Engineering team, the Test Automation Pyramid provides a practical roadmap for effective automated testing. While the specific ratios can be adjusted based on project needs, the core principles remain crucial for building a robust and scalable testing suite.

5. Shift-Left Testing

Shift-left testing revolutionizes the traditional software testing approach. It advocates integrating testing activities much earlier in the software development lifecycle (SDLC). Instead of relegating testing to the end of the development cycle, shift-left testing embeds it throughout the entire process, from requirements gathering and design to coding and implementation. This proactive approach allows for the early detection and resolution of defects, significantly reducing costs and development time.

How Shift-Left Testing Works

Shift-left testing emphasizes continuous testing throughout the SDLC. Testers become active participants from the initial stages, collaborating with developers and business analysts. This collaborative approach ensures that quality is built into the product from the ground up, rather than being an afterthought. By identifying and addressing defects early, shift-left testing minimizes the ripple effect of bugs, preventing them from escalating into larger, more complex problems later on.

Real-World Examples of Shift-Left Testing

Many industry giants have successfully implemented shift-left testing. Microsoft integrates security testing from the design phase in its Security Development Lifecycle. IBM leverages DevOps practices to incorporate testing throughout the development process. Agile teams frequently employ "three amigos" sessions, bringing together developers, testers, and business analysts to discuss requirements and identify potential issues early on.

Actionable Tips for Implementing Shift-Left Testing

- Include Testers in Requirement Review Meetings: Engage testers from the outset to ensure testability is considered during requirement gathering.

- Implement Code Reviews with a Testing Perspective: Encourage developers to review code with a focus on testability and potential defects.

- Use Static Analysis Tools in Development Environments: Integrate static analysis tools to identify coding errors and vulnerabilities early in the development process.

- Create Testable Acceptance Criteria During Planning: Define clear and testable acceptance criteria to ensure alignment between requirements and testing efforts.

- Establish a Clear Definition of Done Including Testing Activities: Include testing as an integral part of the definition of done to ensure that all features are thoroughly tested before release.
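To make the static analysis tip concrete, here is a toy check built on Python's standard `ast` module. It flags bare `except:` clauses, which can hide real errors. In practice a team would rely on an established linter rather than hand-written checks; `find_bare_excepts` is a hypothetical helper written only to show the idea of inspecting code without running it.

```python
import ast
from typing import List


def find_bare_excepts(source: str) -> List[int]:
    """Return line numbers of bare `except:` clauses in Python source."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


# The sample is only parsed, never executed, so risky() need not exist.
sample = """\
try:
    risky()
except:
    pass
"""

print(find_bare_excepts(sample))  # [3]
```

Running a check like this in the editor or as a pre-commit step surfaces the problem minutes after it is written, rather than during a later test phase.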

Why and When to Use Shift-Left Testing

Shift-left testing is a crucial software testing best practice. It provides significant benefits in terms of reduced costs, faster development cycles, and improved software quality. By detecting defects early, it minimizes rework and prevents bugs from propagating through the system. This approach is particularly valuable for complex projects, projects with tight deadlines, and teams adopting Agile or DevOps methodologies. Shift-left testing, championed by figures like Larry Smith at IBM, has become integral to the DevOps movement and the Agile testing community. It represents a fundamental shift toward a more proactive and collaborative approach to software quality. By embracing shift-left testing, teams can deliver higher-quality software more efficiently and effectively.

6. Exploratory Testing

Exploratory testing is a dynamic and hands-on approach to software testing where test design and test execution happen concurrently. Unlike scripted testing, where testers follow pre-defined steps, exploratory testing empowers testers to actively explore the application, learning its intricacies while simultaneously designing and executing tests. This approach emphasizes the tester's skill, creativity, and intuition to uncover hidden defects that traditional scripted tests might miss. It's about discovery and learning in real-time.

How Exploratory Testing Works

Think of exploratory testing as a detective investigating a case. Testers don't have a fixed script; they follow clues, hunches, and their understanding of the software to uncover potential issues. They formulate hypotheses about the software's behavior and then design tests on-the-fly to validate those hypotheses. This allows for rapid adaptation to new information and a deeper understanding of the system's intricacies.

Real-World Examples of Exploratory Testing

Microsoft utilizes exploratory testing for usability testing of its Windows operating system, ensuring a smooth and intuitive user experience. Gaming companies rely heavily on exploratory testing to identify gameplay issues, balance challenges, and refine the user experience before release. Atlassian, known for its agile development practices, incorporates exploratory testing within its development cycles to enhance product quality and identify edge cases.

Actionable Tips for Implementing Exploratory Testing

- Time-Boxed Sessions: Conduct exploratory testing in focused sessions, typically 90-120 minutes long, to maximize concentration and effectiveness.

- Session Notes: Maintain detailed notes during sessions, capturing observations, test ideas, and discovered defects. These notes serve as valuable documentation for future reference.

- Specific Charters: Define specific charters or missions for each session to provide direction and focus. This helps testers concentrate their efforts on particular areas or functionalities.

- Combine with Scripted Tests: Exploratory testing complements scripted testing. Use it to discover new test cases that can then be formalized into automated scripts.

- Debriefing: Conduct post-session debriefings to share findings, discuss learning, and refine testing strategies. This collaborative approach enhances knowledge sharing and improves future testing efforts.

Why and When to Use Exploratory Testing

Exploratory testing is a valuable software testing best practice because it helps uncover critical defects that traditional methods might miss. It promotes a deeper understanding of the software and fosters critical thinking among testers. It's particularly useful for uncovering usability issues, testing complex user flows, and exploring edge cases. While it requires skilled testers, the benefits of exploratory testing in terms of improved software quality and enhanced user experience make it an essential part of a comprehensive testing strategy. Popularized by software testing experts like James Bach, Michael Bolton, Cem Kaner, and Elisabeth Hendrickson, exploratory testing remains a cornerstone of agile and adaptive testing methodologies. It brings a human-centric approach to software testing, leveraging intuition and experience to deliver high-quality, user-friendly products.

7. Behavior-Driven Development (BDD)

Behavior-Driven Development (BDD) elevates software testing by focusing on the user perspective. It extends Test-Driven Development (TDD) by using natural language to describe desired software behaviors. This collaborative approach bridges the gap between technical and non-technical stakeholders, ensuring everyone understands the software's purpose and functionality. BDD uses concrete examples, expressed in a common language, to drive the development and testing process.

How BDD Works: Collaboration and Concrete Examples

BDD revolves around defining user stories and acceptance criteria using a structured format, often employing the "Given-When-Then" framework.

- Given: Describes the initial context or preconditions.

- When: Specifies the action or event that occurs.

- Then: Outlines the expected outcome or result.

This structure creates clear, unambiguous examples that guide development and form the basis for automated tests. Tools like Cucumber and SpecFlow help translate these natural language scenarios into executable tests.
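The Given-When-Then structure can be mirrored directly in test code. The sketch below uses plain unittest with comments marking each step, rather than a BDD tool that binds natural-language steps to code; the `Cart` class is a hypothetical example.

```python
import unittest


class Cart:
    """Hypothetical shopping cart used only for this illustration."""

    def __init__(self):
        self.items = {}  # item name -> (unit price, quantity)

    def add(self, name: str, price: float, quantity: int = 1) -> None:
        _, current_qty = self.items.get(name, (price, 0))
        self.items[name] = (price, current_qty + quantity)

    def total(self) -> float:
        return sum(price * qty for price, qty in self.items.values())


class CartScenarios(unittest.TestCase):
    def test_adding_two_copies_of_a_book_updates_the_total(self):
        # Given an empty cart
        cart = Cart()
        # When the shopper adds two copies of a book priced at 12.50
        cart.add("book", 12.50, quantity=2)
        # Then the cart total is 25.00
        self.assertEqual(cart.total(), 25.00)
```

The scenario uses concrete values (two copies, 12.50 each) so that a non-technical stakeholder can confirm the expected result without reading the implementation.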

Real-World Examples of BDD

Companies like Spotify and the BBC utilize BDD for enhanced collaboration and quality. Spotify uses BDD with Cucumber for feature development, ensuring alignment between developers, testers, and product owners. The BBC employs BDD for its digital platform development, fostering a shared understanding of complex user journeys and improving communication across teams. Banking applications also leverage BDD for compliance and regulatory testing, ensuring adherence to strict requirements.

Actionable Tips for Implementing BDD

- Write Scenarios from the User Perspective: Focus on what the user wants to achieve, not how the system implements it.

- Keep Scenarios Focused and Specific: Each scenario should describe a single, well-defined behavior.

- Use Concrete Examples: Avoid abstract descriptions. Employ specific data values and expected results.

- Involve Business Stakeholders: Collaboration is key to BDD's success. Involve stakeholders in scenario creation and review.

- Regularly Review and Update Feature Files: Keep scenarios up-to-date with evolving requirements.

Why and When to Use BDD

BDD is a valuable software testing best practice because it fosters communication, reduces ambiguity, and ensures that software meets user needs. It's particularly useful in complex projects with diverse stakeholder groups, agile environments, and projects with a strong emphasis on user experience. While it requires a shift in mindset and collaboration, BDD leads to higher quality software, improved stakeholder satisfaction, and reduced rework. Popularized by Dan North, Aslak Hellesøy, and Gojko Adzic, BDD represents a significant step towards user-centric software development. By focusing on behavior from the user's perspective, it helps ensure that the final product delivers genuine value and addresses real-world needs.

8. API Testing Best Practices

Application Programming Interfaces (APIs) are the backbone of modern software, enabling different systems to communicate and exchange data. A comprehensive API testing strategy is crucial for ensuring software quality, reliability, and security. API testing focuses on validating the functionality, performance, and security of these interfaces at the service layer, without relying on the user interface. It confirms that APIs meet expectations for data exchange, error handling, and overall behavior.

How API Testing Works

API testing involves sending requests to an API endpoint and verifying the response. This includes checking the response status code, data format, payload content, and performance metrics. Different methods, such as GET, POST, PUT, and DELETE, are used to interact with the API and simulate various scenarios. Automated testing tools are commonly employed to streamline this process and enable continuous testing within CI/CD pipelines.
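The request-and-verify loop described above can be sketched using only the Python standard library. To keep the example self-contained, it starts a small stub server in the same process; `StubHandler`, the `/users/1` endpoint, and the payloads are hypothetical stand-ins for a real service under test.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubHandler(BaseHTTPRequestHandler):
    """Hypothetical in-process API standing in for the service under test."""

    def do_GET(self):
        if self.path == "/users/1":
            body = json.dumps({"id": 1, "name": "Ada"}).encode("utf-8")
            self.send_response(200)
        else:
            body = json.dumps({"error": "not found"}).encode("utf-8")
            self.send_response(404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


# An opener with no proxies, so local requests are never routed elsewhere.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def call(url: str):
    """Send a GET request and return (status, content type, parsed JSON body)."""
    try:
        with opener.open(url, timeout=5) as response:
            return response.status, response.headers["Content-Type"], json.load(response)
    except urllib.error.HTTPError as error:
        return error.code, error.headers["Content-Type"], json.load(error)


server = HTTPServer(("127.0.0.1", 0), StubHandler)  # port 0 picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_port}"

# Positive case: check status code, data format, and payload content.
status, content_type, payload = call(f"{base_url}/users/1")
assert status == 200
assert content_type == "application/json"
assert payload == {"id": 1, "name": "Ada"}

# Negative case: an unknown resource returns 404 with an error body.
status, content_type, payload = call(f"{base_url}/users/999")
assert status == 404
assert payload == {"error": "not found"}

server.shutdown()
server.server_close()
```

Against a real service, the stub server would be dropped and `base_url` would point at a test environment; the assertions on status, format, and payload stay the same.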

Real-World Examples of API Testing

Companies like Netflix extensively test their microservices APIs to ensure the reliability of their streaming platform. Twitter utilizes API testing to enforce rate limiting and maintain data consistency across their platform. Payment processors, like Stripe, implement comprehensive API testing to guarantee the security and integrity of financial transactions. These examples highlight the critical role of API testing in diverse software ecosystems.

Actionable Tips for Implementing API Testing

- Test Positive and Negative Scenarios: Validate expected behavior with positive tests and ensure proper error handling with negative tests.

- Schema Validation: Verify that API responses conform to the defined schema and data types.

- Thorough Error Testing: Test a wide range of error codes and messages to ensure comprehensive error handling.

- Boundary Value Testing: Test edge cases and boundary values to identify vulnerabilities and unexpected behavior.

- Contract Testing: Implement contract testing to verify interactions between dependent services.

- Test Data Management: Use effective test data management strategies to create realistic and reusable test data sets.
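The schema validation and negative-testing tips can be illustrated with a small hand-rolled checker. Real projects often validate against a formal schema definition instead; here a plain dictionary of field names and Python types stands in for one, and `USER_SCHEMA` and `schema_errors` are hypothetical names.

```python
from typing import Any, Dict, List

# Hypothetical schema: field name -> expected Python type.
USER_SCHEMA = {"id": int, "name": str, "email": str}


def schema_errors(payload: Dict[str, Any], schema: Dict[str, type]) -> List[str]:
    """Return a list of human-readable schema violations (empty if valid)."""
    errors = []
    for field, expected in schema.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected):
            errors.append(
                f"{field}: expected {expected.__name__}, "
                f"got {type(payload[field]).__name__}"
            )
    for field in payload:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


valid = {"id": 7, "name": "Ada", "email": "ada@example.com"}
invalid = {"id": "7", "name": "Ada", "role": "admin"}

print(schema_errors(valid, USER_SCHEMA))    # []
print(schema_errors(invalid, USER_SCHEMA))
# ['id: expected int, got str', 'missing field: email', 'unexpected field: role']
```

Returning every violation, rather than stopping at the first, makes a failing test report more useful when a response drifts from its contract in several ways at once.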

Why and When to Use API Testing

API testing is an essential software testing best practice, providing early detection of integration issues, improving security, and ensuring the reliability of data exchange between systems. It is especially valuable in microservices architectures, where multiple services interact via APIs. Employing API testing early in the development lifecycle reduces debugging time and contributes significantly to delivering robust and reliable software. This approach, influenced by the rise of RESTful API design principles and microservices architecture, is now a core component of DevOps and continuous testing practices.

9. Test Data Management

Test Data Management (TDM) is a systematic approach to creating, maintaining, and managing the data used throughout the software testing lifecycle. It encompasses strategies for data generation, data masking for privacy, data refresh mechanisms, and ensuring test data consistency across different testing environments. Effective TDM is crucial for maintaining data quality and compliance with regulations like GDPR and HIPAA. It also directly impacts the reliability and efficiency of your testing processes.

How Test Data Management Works

TDM involves several key processes. Data generation creates realistic and representative data, whether synthetically or by subsetting production data. Data masking protects sensitive information by obfuscating or replacing real data with realistic but fictional substitutes. Data provisioning delivers the right data to the right environment at the right time, often through automated pipelines. Finally, data archiving and versioning ensure traceability and allow for easy rollback to previous data states.
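Two of these processes, masking and synthetic generation, can be sketched briefly. The function names, the salt, and the record fields below are hypothetical. Note that salted hashing is shown only to illustrate consistent substitution; it is an assumption of this example, not a vetted anonymization method for regulated data.

```python
import hashlib
import random
from typing import Dict, List


def mask_email(email: str, salt: str = "test-env") -> str:
    """Replace an email with a stable, fictional substitute.

    The same input always maps to the same output, so records that
    share an email still line up after masking.
    """
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:10]}@example.com"


def synthetic_customers(count: int, seed: int = 42) -> List[Dict[str, object]]:
    """Generate reproducible fictional customer records."""
    rng = random.Random(seed)  # a fixed seed makes test runs repeatable
    first_names = ["Ada", "Grace", "Alan", "Edsger"]
    return [
        {
            "id": i,
            "name": rng.choice(first_names),
            "balance": round(rng.uniform(0, 5000), 2),
        }
        for i in range(1, count + 1)
    ]


masked = mask_email("Jane.Doe@corp.example")
customers = synthetic_customers(3)
print(masked)
print(customers)
```

Seeding the generator means a failing test can be rerun against exactly the same data, which helps separate data problems from code problems.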

Real-World Examples of Test Data Management

Many organizations rely heavily on robust TDM. Banking institutions use masked production data for testing while maintaining GDPR compliance. This allows them to test real-world scenarios without compromising customer privacy. E-commerce platforms generate synthetic customer data for load testing, simulating peak traffic conditions to ensure scalability. Healthcare applications use anonymized patient data for testing while maintaining HIPAA compliance, safeguarding patient privacy while ensuring software quality.

Actionable Tips for Implementing Test Data Management

- Implement automated data refresh processes: Automate the process of refreshing test data to ensure consistency and reduce manual effort.

- Use data generation tools: Leverage data generation tools for creating large volumes of realistic and diverse test data.

- Establish data governance policies: Define clear policies and procedures for data access, usage, and storage to maintain data quality and security.

- Create reusable data sets: Develop reusable data sets for common test scenarios to improve efficiency and reduce redundancy.

- Monitor data quality and consistency: Regularly monitor the quality and consistency of test data across different environments.

- Implement proper access controls and audit trails: Ensure proper access controls and maintain audit trails for all data-related operations.

Why and When to Use Test Data Management

TDM is a software testing best practice that becomes increasingly critical as data volume and regulatory requirements grow. It addresses the challenges of managing large and complex data sets, ensures data privacy, and improves the reliability of test results. It's particularly beneficial when dealing with sensitive data, performing performance and load testing, and automating testing processes. Investing in robust TDM practices ultimately leads to higher quality software and faster release cycles while mitigating the risks of data breaches and compliance violations. Popularized by enterprise testing frameworks and driven by the growing need for data privacy, TDM is now an integral part of DevOps and continuous testing adoption. Its effective implementation can significantly improve the efficiency and effectiveness of your software testing best practices.

9 Best Practices Comparison Matrix

| Practice | 🔄 Implementation Complexity | ⚡ Resource Requirements | 📊 Expected Outcomes | 💡 Ideal Use Cases | ⭐ Key Advantages |
|---|---|---|---|---|---|
| Test-Driven Development (TDD) | Medium - requires discipline and practice | Moderate - requires test frameworks and developer time | High code quality and modularity, early regression detection | Suitable for critical, complex codebases benefiting from incremental development | Ensures high test coverage, reduces debugging, improves design |
| CI/CD Testing | High - significant initial setup and maintenance | High - infrastructure, automation tools needed | Faster releases, immediate feedback on commits | Continuous delivery environments, large-scale software projects | Immediate feedback, reduces manual testing, prevents broken code |
| Risk-Based Testing | Medium - needs risk assessments, domain expertise | Moderate - focused on critical areas only | Optimized test effort, better release decisions | Business-critical systems where testing resources are limited | Prioritizes critical features, improves ROI, aligns with business goals |
| Test Automation Pyramid | Medium - requires balanced test suite design | Moderate - emphasis on unit and integration tests | Faster, reliable testing with cost optimization | Projects needing reliable automated testing with maintainability | Fast feedback, lower maintenance, supports CI |
| Shift-Left Testing | Medium to High - cultural/process changes needed | Moderate - tooling and training required | Early defect detection, reduced defect fix cost | Agile, DevOps teams aiming for early quality assurance | Prevents defects early, enhances collaboration, speeds time-to-market |
| Exploratory Testing | Low to Medium - depends on tester skills | Low - manual effort driven by testers | Discovery of unexpected defects, quick feedback | Agile teams needing adaptability and scenario exploration | Uncovers edge cases, flexible, complements scripted tests |
| Behavior-Driven Development (BDD) | Medium to High - scenario writing and collaboration | Moderate - tools for scenario automation | Improved communication, living documentation | Cross-functional teams requiring clear requirement understanding | Enhances communication, user-focus, reduces requirement misunderstandings |
| API Testing Best Practices | Medium - requires technical expertise, automation | Moderate to High - tools and environment setup | Reliable API functionality, faster testing independent of UI | Microservices, backend services, integration points | Tests core logic, faster than UI tests, language/platform independent |
| Test Data Management | High - complex setup and ongoing maintenance | High - storage, compliance tools, infrastructure | Consistent test results, compliance with data privacy laws | Environments with sensitive data or complex data needs | Ensures data privacy, scalable testing, reduces setup time |

Level Up Your Software Quality with These Best Practices

This article explored a range of software testing best practices, offering actionable insights for everyone from indie developers to large teams. We've covered key strategies that can drastically improve your software development lifecycle. Let's recap some of the most crucial takeaways.

Core Principles for Effective Software Testing

Remember the importance of integrating testing early and often. Shift-left testing, coupled with Test-Driven Development (TDD), helps prevent bugs early on, saving you time and resources down the line. Building a robust Test Automation Pyramid ensures efficient and comprehensive test coverage.

Strategic Approaches to Maximize Impact

Risk-based testing allows you to prioritize your testing efforts based on potential impact, maximizing your efficiency. Exploratory testing empowers testers to uncover unexpected issues, while Behavior-Driven Development (BDD) bridges the gap between technical and business stakeholders.

Optimizing Testing for Modern Development

In today's interconnected world, API testing is crucial. Understanding API testing best practices ensures the reliability of your software's core functionality. Effective test data management ensures reliable and consistent testing outcomes. Continuous Integration/Continuous Deployment (CI/CD) pipelines automate testing and deployment, accelerating your release cycles.

Putting it All Together for Superior Software

By implementing these software testing best practices, you're not just checking for bugs - you're building a culture of quality. These practices lead to more robust software, faster release cycles, and happier users. A proactive approach to testing isn't just a good idea - it's essential for success in today's competitive market.

Next Steps for Elevating Your Testing Strategy

- Assess your current testing process: Identify areas where you can incorporate these best practices.

- Start small and iterate: Choose one or two practices to implement first and gradually expand your testing strategy.

- Invest in training and tools: Equip your team with the knowledge and resources they need to succeed.

- Track your progress and measure results: Monitor the impact of these practices on your software quality and release cycles.

If you want to automate and optimize your mobile app testing process, check out Quash, an AI-powered mobile app testing solution that helps teams generate tests instantly, improve coverage, and ship with confidence.

Mastering these software testing best practices is an investment that pays dividends. By embracing a comprehensive and proactive testing approach, you'll deliver higher-quality software, increase customer satisfaction, and gain a competitive edge. Quality isn't just a feature - it's a foundation for success.
